Evaluation Data

The following datasets were converted into standard evaluation prompts before being reviewed.

MATH-hard

Data description:

The Mathematics Aptitude Test of Heuristics (MATH) dataset contains problems from math competitions such as AMC 10, AMC 12, and AIME. Each problem in the MATH dataset is accompanied by a detailed solution. Here we use its hard subset (MATH-hard) from the official lm-eval-harness integration, which contains only the Level 5 questions from the original data.

Dataset composition and specification:

Source data volume:

The original data contains 1,324 Level 5 questions.

Data fields:

| Key | Description |
|---|---|
| problem | Math competition problem |
| solution | Detailed step-by-step solution |
| level | Difficulty level, from "Level 1" (easiest) to "Level 5" (hardest) |
| type | Subject category: Algebra, Counting & Probability, Geometry, Intermediate Algebra, Number Theory, Prealgebra, or Precalculus |
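Because MATH-hard is defined as the Level 5 slice of MATH, building it from raw records amounts to filtering on the level field; scoring usually also needs the final answer, which MATH solutions conventionally wrap in \boxed{...}. The Python sketch below shows both steps under stated assumptions: the two records are hypothetical stand-ins that only follow the field layout in the table above, and hard_subset and last_boxed are illustrative helpers, not functions from lm-eval-harness, whose own filtering and answer extraction may differ.

```python
from typing import Optional


def hard_subset(records: list[dict]) -> list[dict]:
    """Keep only Level 5 problems, the slice that defines MATH-hard."""
    return [r for r in records if r["level"] == "Level 5"]


def last_boxed(solution: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a solution, honoring nested braces."""
    start = solution.rfind("\\boxed{")
    if start == -1:
        return None
    depth, chars = 1, []
    for ch in solution[start + len("\\boxed{"):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars)
        chars.append(ch)
    return None  # braces never closed


# Hypothetical records that only mimic the field layout (problem, solution, level, type).
records = [
    {"problem": "(problem text)", "solution": "So the answer is $\\boxed{\\frac{1}{2}}$.",
     "level": "Level 5", "type": "Algebra"},
    {"problem": "(problem text)", "solution": "Thus the answer is $\\boxed{7}$.",
     "level": "Level 2", "type": "Prealgebra"},
]

hard = hard_subset(records)
print(len(hard))                        # 1
print(last_boxed(hard[0]["solution"]))  # \frac{1}{2}
```

Matching braces by depth, rather than with a simple regular expression, keeps nested answers such as \frac{1}{2} intact.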

Sample from the source dataset:

{

"problem": "John draws a regular five pointed star in the sand, and at each of the 5 outward-pointing points and 5 inward-pointing points he places one of ten different sea shells. How many ways can he place the shells, if reflections and rotations of an arrangement are considered equivalent?",

"level": "Level 5",

"type": "Counting & Probability"

}

Paper citation:

MATH: https://arxiv.org/abs/2103.03874

@inproceedings{hendrycks2021MATH,

author = {Hendrycks, Dan and Burns, Collin and Kadavath, Saurav and Arora, Akul and Basart, Steven and Tang, Eric and Song, Dawn and Steinhardt, Jacob},

booktitle = {Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks},

title = {Measuring Mathematical Problem Solving With the MATH Dataset},

volume = {1},

year = {2021}

}

Dataset copyright and usage instructions:

MIT License

GPQA

Data description:

Google-Proof Q&A (GPQA) is a set of challenging multiple-choice questions written by domain experts in biology, physics, and chemistry. It is called "Google-proof" because highly skilled non-expert validators reach only 34% accuracy, even when spending over 30 minutes on average with unrestricted web access. The extended set contains 546 questions; the main set contains 448 of them, and the most challenging "diamond" subset contains 198.
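Each raw GPQA record stores the correct answer and three incorrect answers in separate fields (see the sample in this section), so turning a record into a standard multiple-choice prompt means shuffling the four options and remembering which letter ended up holding the correct one. The Python sketch below assumes the field names shown in the sample; the prompt wording, the A to D lettering, and the helper to_multiple_choice are hypothetical and are not necessarily the template used in this evaluation.

```python
import random

LETTERS = "ABCD"


def to_multiple_choice(record: dict, seed: int) -> tuple[str, str]:
    """Build a 4-option prompt from a GPQA-style record; return (prompt, correct_letter).

    The prompt wording below is a hypothetical template, not the one used in this evaluation.
    """
    options = [
        record["Correct Answer"],
        record["Incorrect Answer 1"],
        record["Incorrect Answer 2"],
        record["Incorrect Answer 3"],
    ]
    order = list(range(len(options)))
    random.Random(seed).shuffle(order)        # per-record seed gives a reproducible order
    correct_letter = LETTERS[order.index(0)]  # options[0] is the correct answer
    lines = [record["Question"].strip(), ""]
    for letter, idx in zip(LETTERS, order):
        lines.append(f"({letter}) {options[idx]}")
    lines += ["", "Answer with the letter of the correct option."]
    return "\n".join(lines), correct_letter


# Field values from the sample in this section; the question text is abbreviated here.
record = {
    "Question": "Which energy difference lets the two levels be clearly resolved?",
    "Correct Answer": "10^-4 eV",
    "Incorrect Answer 1": "10^-11 eV",
    "Incorrect Answer 2": "10^-8 eV",
    "Incorrect Answer 3": "10^-9 eV",
}

prompt, answer_letter = to_multiple_choice(record, seed=42)
print(prompt)
print("correct:", answer_letter)
```

Shuffling matters because the raw data always lists the correct answer first; seeding per record keeps the option order identical across runs, so different models see the same prompts.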

Sample from the source dataset:

{

'Question': 'Two quantum states with energies E1 and E2 have a lifetime of 10^-9 sec and 10^-8 sec, respectively. We want to clearly distinguish these two energy levels. Which one of the following options could be their energy difference so that they can be clearly resolved?',

'Subdomain': 'Physics (general)',

'Correct Answer': '10^-4 eV',

'Incorrect Answer 1': '10^-11 eV',

'Incorrect Answer 2': '10^-8 eV',

'Incorrect Answer 3': '10^-9 eV',

'Explanation': 'According to the uncertainty principle, Delta E* Delta t=hbar/2. Delta t is the lifetime and Delta E is the width of the energy level. With Delta t=10^-9 s==> Delta E1= 3.3 10^-7 ev. And Delta t=10^-11 s gives Delta E2=3.310^-8 eV. Therefore, the energy difference between the two states must be significantly greater than 10^-7 ev. So the answer is 10^-4 ev.'

}

Paper citation:

GPQA: https://openreview.net/forum?id=Ti67584b98

@inproceedings{

rein2024gpqa,

title={{GPQA}: A Graduate-Level Google-Proof Q\&A Benchmark},

author={David Rein and Betty Li Hou and Asa Cooper Stickland and Jackson Petty and Richard Yuanzhe Pang and Julien Dirani and Julian Michael and Samuel R. Bowman},

booktitle={First Conference on Language Modeling},

year={2024}

}

TheoremQA

Data description:

TheoremQA contains 800 high-quality questions curated by domain experts, covering roughly 350 well-known theorems (e.g., Taylor's theorem, Lagrange's theorem, and the Quantum Theorem) from mathematics, physics, electrical engineering and computer science (EE&CS), and finance.

Dataset composition and specification:

Evaluation data volume:

The test set contains 800 questions, all used for evaluation.

Data fields:

| Key | Description |
|---|---|
| Question | The question text |
| Answer | The reference answer |
| Answer_type | Type of the answer (e.g., "integer") |
| Picture | Associated image, if any (empty otherwise) |
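The sample question shown in this section asks how many ways a set of 8 elements can be divided into 5 non-empty ordered subsets. Reading "ordered subsets" as subsets whose elements are arranged in a sequence (while the collection of subsets is unordered), the count is the unsigned Lah number L(n, k) = C(n-1, k-1) · n!/k!, which for n = 8 and k = 5 gives 35 · 336 = 11760 and matches the reference answer. The Python sketch below is an illustrative check; the interpretation and the helper names lah and lah_rec are assumptions. It computes the value from the closed form and cross-checks it with the recurrence L(n, k) = (n - 1 + k) L(n - 1, k) + L(n - 1, k - 1).

```python
from functools import lru_cache
from math import comb, factorial


def lah(n: int, k: int) -> int:
    """Unsigned Lah number via the closed form C(n-1, k-1) * n! / k!."""
    if n == 0 and k == 0:
        return 1
    if n == 0 or k == 0 or k > n:
        return 0
    return comb(n - 1, k - 1) * factorial(n) // factorial(k)


@lru_cache(maxsize=None)
def lah_rec(n: int, k: int) -> int:
    """Same numbers from the recurrence L(n, k) = (n - 1 + k) L(n - 1, k) + L(n - 1, k - 1)."""
    if n == 0 or k == 0:
        return 1 if n == k else 0
    return (n - 1 + k) * lah_rec(n - 1, k) + lah_rec(n - 1, k - 1)


print(lah(8, 5))      # 11760
print(lah_rec(8, 5))  # 11760
```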

Sample from the source dataset:

{'Question': 'How many ways are there to divide a set of 8 elements into 5 non-empty ordered subsets?',

'Answer': '11760',

'Answer_type': 'integer',

'Picture': ''}

Paper citation:

TheoremQA: https://arxiv.org/abs/2305.12524

@inproceedings{chen2023theoremqa,

title = "{T}heorem{QA}: A Theorem-driven Question Answering Dataset",

author = "Chen, Wenhu and

Yin, Ming and

Ku, Max and

Lu, Pan and

Wan, Yixin and

Ma, Xueguang and

Xu, Jianyu and

Wang, Xinyi and

Xia, Tony",

booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",

year = "2023",

pages = "7889--7901"

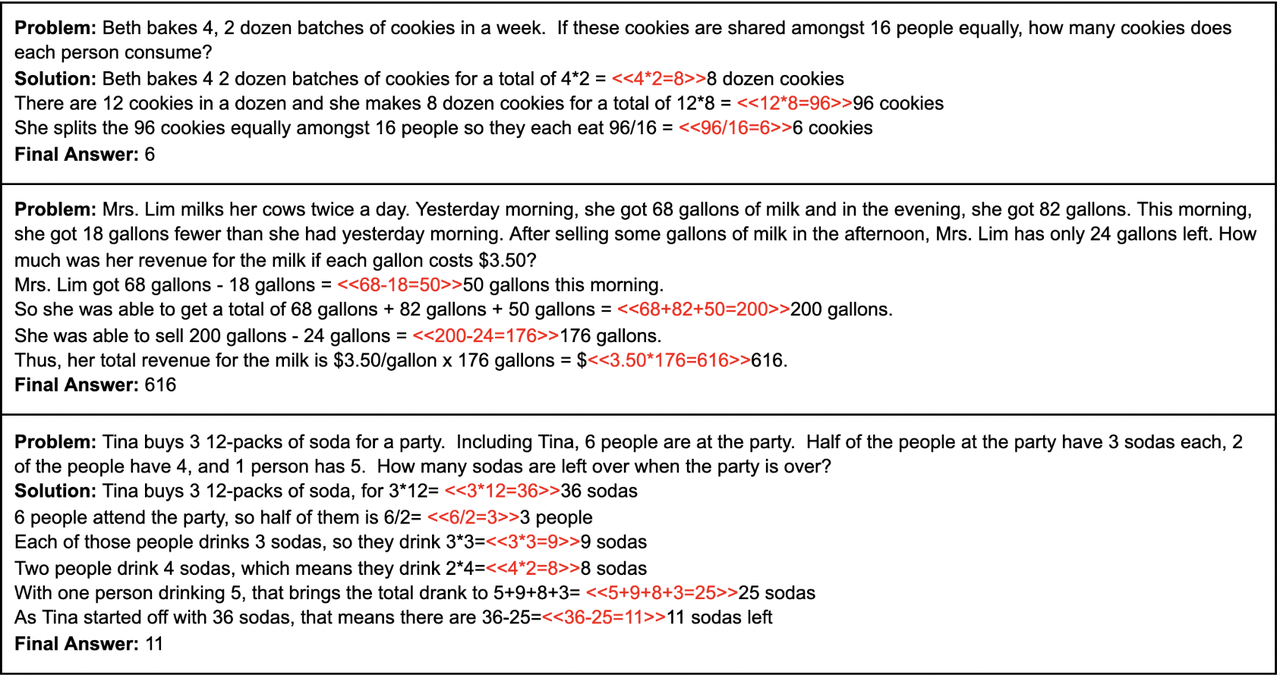

}

GSM

GSM8K: https://github.com/openai/grade-school-math

Data description:

The GSM8K dataset, released by OpenAI, is designed to evaluate and improve the ability of large language models to solve math word problems. It contains 8.5K high-quality grade school math problems written by human problem writers, split into 7.5K training problems and 1K test problems. Each problem takes between 2 and 8 steps to solve, and the solutions mainly consist of a sequence of elementary calculations using basic arithmetic operations (addition, subtraction, multiplication, and division) that progressively compute the final answer. A bright middle school student should be able to solve every problem.

The raw data files can be found in:

- grade_school_math/data/train.jsonl
- grade_school_math/data/test.jsonl

Each line of these files corresponds to a single grade school math problem, saved as a JSON dictionary with a "question" key and an "answer" key. The answer contains calculation annotations (e.g., <<48/2=24>>), and its final line holds the final numeric solution, preceded by ####.
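Given the answer format described above, a grader typically needs two operations: reading the final numeric solution after the #### marker, and optionally stripping the <<...>> calculation annotations to recover the human-readable steps. This Python sketch uses an illustrative answer string written in the GSM8K format (not quoted from the dataset); the helper names are hypothetical, and removing commas from the final answer is a defensive normalization assumed here rather than part of the documented format.

```python
import re

ANNOTATION = re.compile(r"<<[^<>]*>>")  # calculator annotations such as <<48/2=24>>


def final_answer(answer: str) -> str:
    """Return the final numeric solution: the text after the last '####' marker."""
    marker = answer.rfind("####")
    if marker == -1:
        raise ValueError("answer has no '####' line")
    # Dropping thousands separators is an assumed normalization, not part of the format.
    return answer[marker + len("####"):].strip().replace(",", "")


def strip_annotations(answer: str) -> str:
    """Remove <<...>> calculation annotations, keeping the human-readable steps."""
    return ANNOTATION.sub("", answer)


# Illustrative answer string written in the GSM8K format (not quoted from the dataset).
example = (
    "She sold 48/2 = <<48/2=24>>24 clips in May.\n"
    "She sold 48+24 = <<48+24=72>>72 clips in total.\n"
    "#### 72"
)

print(final_answer(example))                       # 72
print(strip_annotations(example).splitlines()[0])  # She sold 48/2 = 24 clips in May.
```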

Sample question from the source dataset:

Paper citation:

GSM: https://arxiv.org/abs/2110.14168

@article{cobbe2021gsm8k,

title={Training Verifiers to Solve Math Word Problems},

author={Cobbe, Karl and Kosaraju, Vineet and Bavarian, Mohammad and Chen, Mark and Jun, Heewoo and Kaiser, Lukasz and Plappert, Matthias and Tworek, Jerry and Hilton, Jacob and Nakano, Reiichiro and Hesse, Christopher and Schulman, John},

journal={arXiv preprint arXiv:2110.14168},

year={2021}

}

Dataset copyright and usage instructions:

MIT License

Copyright (c) 2021 OpenAI

AIME 2024 (MATH)

Link: https://huggingface.co/datasets/Maxwell-Jia/AIME_2024#aime-2024-dataset

Data description:

This dataset contains the 30 problems of the 2024 American Invitational Mathematics Examination (AIME I and AIME II, 15 problems each) in their original English wording, provided in JSONL format. The problems span a wide range of mathematical domains, including geometry, algebra, and number theory, and each comes with a complete, detailed solution, which makes the dataset suitable for evaluating a model's advanced multi-step mathematical reasoning and creative problem solving.
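AIME answers are integers from 000 to 999, and the sample solution shown in this section reports its answer zero-padded as \boxed{033}. A grader should therefore compare answers as integers rather than raw strings, so that a model answer of 33 is accepted. The Python sketch below is a minimal, hypothetical normalizer; it assumes answers arrive either as bare digits or inside \boxed{...}, which may not match the exact field format of the Hugging Face dataset.

```python
import re
from typing import Optional


def aime_answer(text: str) -> Optional[int]:
    """Parse an AIME answer given as bare digits ('033') or inside \\boxed{...}."""
    boxed = re.findall(r"\\boxed\{\s*(\d+)\s*\}", text)
    candidate = boxed[-1] if boxed else text.strip()
    if not re.fullmatch(r"\d{1,3}", candidate):
        return None  # AIME answers are integers from 000 to 999
    return int(candidate)


def is_correct(prediction: str, reference: str) -> bool:
    """Compare answers numerically so that '33' and '033' are treated as equal."""
    pred, ref = aime_answer(prediction), aime_answer(reference)
    return pred is not None and pred == ref


print(is_correct("33", "\\boxed{033}"))  # True
print(is_correct("34", "033"))           # False
```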

Sample from the source dataset (simplified):

2024-II-4

Question: Let $x,y$ and $z$ be positive real numbers that satisfy the following system of equations:

\[\log_2\left({x \over yz}\right) = {1 \over 2}\]

\[\log_2\left({y \over xz}\right) = {1 \over 3}\]

\[\log_2\left({z \over xy}\right) = {1 \over 4}\]

Then the value of $\left|\log_2(x^4y^3z^2)\right|$ is $\tfrac{m}{n}$ where $m$ and $n$ are relatively prime positive integers. Find $m+n$.

Solution:

Denote $\log_2(x) = a$, $\log_2(y) = b$, and $\log_2(z) = c$.

Then, we have:

$a-b-c = \frac{1}{2}$,

$-a+b-c = \frac{1}{3}$,

$-a-b+c = \frac{1}{4}$.

Now, we can solve to get $a = \frac{-7}{24}, b = \frac{-9}{24}, c = \frac{-5}{12}$.
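The "solve" step above can be made explicit: adding the three equations gives $-(a+b+c) = \frac{1}{2}+\frac{1}{3}+\frac{1}{4} = \frac{13}{12}$, and since each equation has the form $2 \cdot (\text{own variable}) - (a+b+c) = \text{right-hand side}$, each variable equals $\frac{1}{2}(\text{right-hand side} + (a+b+c))$. The Python sketch below carries out this elimination with exact rational arithmetic from the standard fractions module, as an illustrative check rather than part of the dataset's solution; note that $b = \frac{-9}{24}$ reduces to $-\frac{3}{8}$.

```python
from fractions import Fraction

# Right-hand sides of the system in a = log2(x), b = log2(y), c = log2(z).
r1, r2, r3 = Fraction(1, 2), Fraction(1, 3), Fraction(1, 4)

# Summing the three equations gives -(a + b + c) = r1 + r2 + r3.
s = -(r1 + r2 + r3)  # s = a + b + c = -13/12

# Each equation has the form 2 * (own variable) - s = rhs.
a = (r1 + s) / 2
b = (r2 + s) / 2
c = (r3 + s) / 2

value = abs(4 * a + 3 * b + 2 * c)  # |log2(x^4 y^3 z^2)| = m/n
print(a, b, c)                              # -7/24 -3/8 -5/12
print(value)                                # 25/8
print(value.numerator + value.denominator)  # 33
```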

Plugging these values in, we obtain $|4a + 3b + 2c| = \frac{25}{8}$, so $m + n = 25 + 8 = 33$ and the answer is $\boxed{033}$.

Copyright notice:

Source: AIME 2024 I & II

License: MIT License

Copyright © [year] [fullname]