Evaluation Data (Multi-Task Learning)

PASCAL-Context

Metrics: mIoU, maxF, mErr, MTL gain
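These metrics are not defined in this document. As a hedged sketch, assuming the usual pairing (mIoU for semantic and human part segmentation, maxF for saliency detection), the two can be computed with NumPy as shown below. The function names, the `ignore_index=255` convention for unlabeled pixels, the threshold grid, and β² = 0.3 (a common choice in saliency evaluation) are assumptions, not details confirmed for this benchmark.

```python
import numpy as np


def confusion_matrix(pred: np.ndarray, gt: np.ndarray, num_classes: int,
                     ignore_index: int = 255) -> np.ndarray:
    """Confusion matrix of shape (num_classes, num_classes); rows are GT, columns are predictions."""
    valid = gt != ignore_index  # assumption: 255 marks unlabeled pixels
    idx = gt[valid].astype(np.int64) * num_classes + pred[valid].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def mean_iou(conf: np.ndarray) -> float:
    """Mean IoU over classes that occur in either the prediction or the ground truth."""
    tp = np.diag(conf).astype(np.float64)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp
    present = union > 0  # skip classes absent from both maps
    return float(np.mean(tp[present] / union[present]))


def max_f_measure(prob: np.ndarray, gt: np.ndarray, beta2: float = 0.3,
                  num_thresholds: int = 99) -> float:
    """Best F-beta score over binarization thresholds of a saliency map in [0, 1]."""
    gt = gt.astype(bool)
    best = 0.0
    for t in np.linspace(0.01, 0.99, num_thresholds):
        pred = prob >= t
        tp = np.logical_and(pred, gt).sum()
        precision = tp / max(int(pred.sum()), 1)
        recall = tp / max(int(gt.sum()), 1)
        denom = beta2 * precision + recall
        if denom > 0:
            best = max(best, float((1 + beta2) * precision * recall / denom))
    return best
```

For a whole test set, the per-image confusion matrices would be summed before calling `mean_iou`; how maxF is aggregated across images is not specified in this document.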

Data description:

PASCAL-Context is a commonly used benchmark for multi-task learning. It contains 10,103 images with annotations for four tasks: semantic segmentation, human part segmentation, saliency detection, and surface normal estimation. The training, validation, and test sets contain 4,998, 2,607, and 2,498 samples, respectively.

Dataset structure:

Amount of source data:

The dataset is divided into an auxiliary training set (4,998), a validation set (2,607), and a test set (2,498).

Amount of test data:

All 2,498 examples in the source dataset's test set.

Data detail:

Raw image: RGB image

Annotation maps: per-pixel ground truth for semantic segmentation, human parts, saliency, and surface normals.

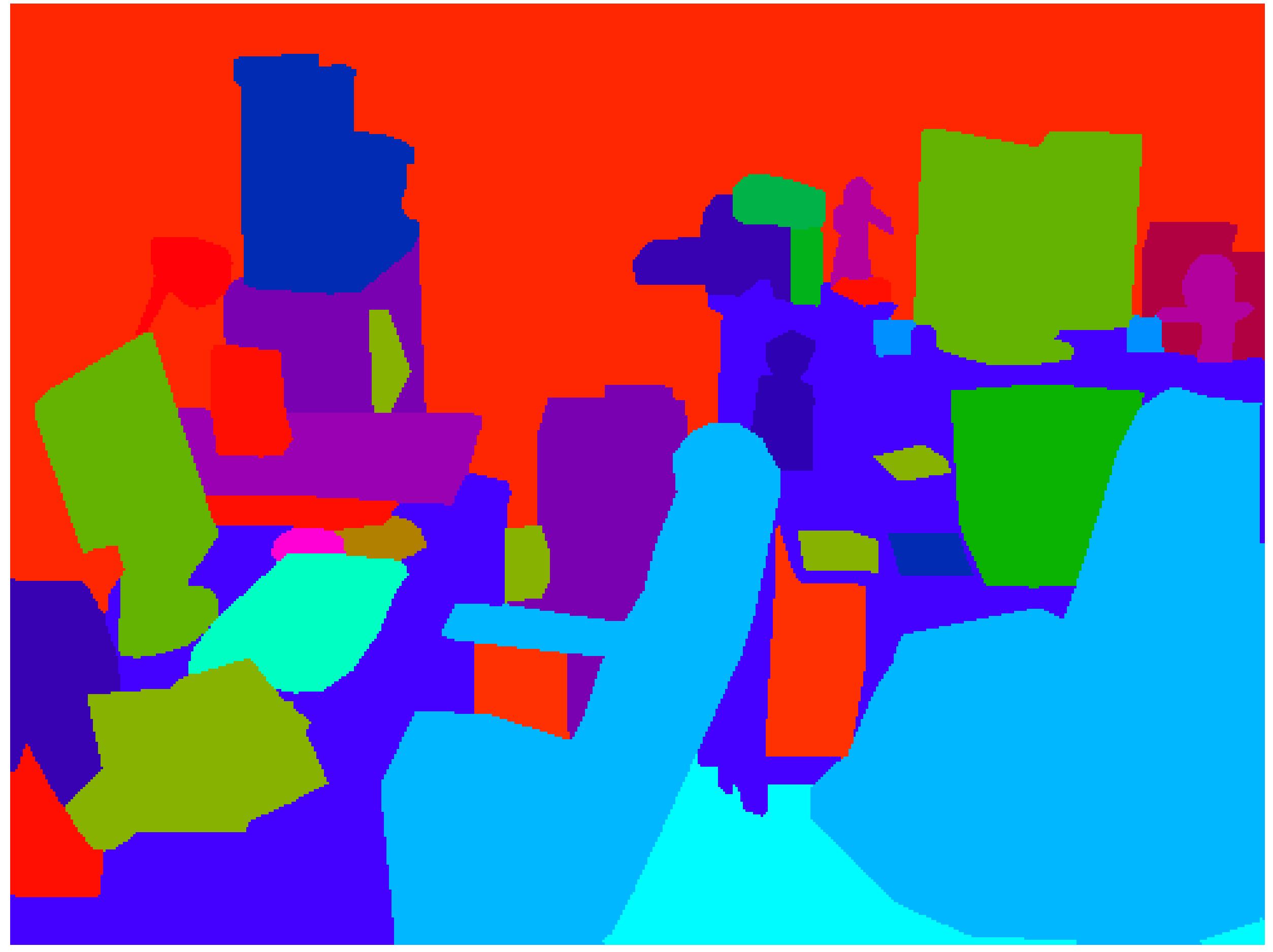

Sample of source dataset:

Raw image:

Annotation map:

Citation information:

@InProceedings{mottaghi_cvpr14,

author = {Roozbeh Mottaghi and Xianjie Chen and Xiaobai Liu and Nam-Gyu Cho and Seong-Whan Lee and Sanja Fidler and Raquel Urtasun and Alan Yuille},

title = {The Role of Context for Object Detection and Semantic Segmentation in the Wild},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2014},

}

Licensing information:

NYUDv2

Data description:

The NYUDv2 dataset consists of RGB-D video sequences of indoor scenes captured with a Microsoft Kinect. The recordings are highly diverse, covering 464 indoor scenes in 3 cities. The dataset contains 1,449 densely annotated images, with annotations for three tasks: semantic segmentation, depth estimation, and surface normal estimation. The training, validation, and test sets contain 795, 327, and 327 samples, respectively.
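Surface normal estimation is scored with mErr, which this document lists but does not define. A minimal sketch, assuming mErr is the mean angle in degrees between predicted and ground-truth normals over valid pixels; the function name and the rule that a zero-length ground-truth normal marks an unannotated pixel are assumptions.

```python
from typing import Optional

import numpy as np


def mean_angular_error(pred: np.ndarray, gt: np.ndarray,
                       valid: Optional[np.ndarray] = None) -> float:
    """Mean angle in degrees between predicted and GT normal maps of shape (H, W, 3)."""
    eps = 1e-8
    # Normalize both maps to unit length; np.maximum avoids division by zero.
    p = pred / np.maximum(np.linalg.norm(pred, axis=-1, keepdims=True), eps)
    g = gt / np.maximum(np.linalg.norm(gt, axis=-1, keepdims=True), eps)
    # Clip the cosine so rounding error cannot push arccos outside its domain.
    cos = np.clip(np.sum(p * g, axis=-1), -1.0, 1.0)
    angles = np.degrees(np.arccos(cos))
    if valid is None:
        # Assumption: a zero-length GT normal marks a pixel without annotation.
        valid = np.linalg.norm(gt, axis=-1) > 0
    return float(angles[valid].mean())
```

Normalizing inside the function means predictions do not have to be unit vectors already; only their direction affects the score.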

Dataset structure:

Amount of source data:

The dataset is divided into an auxiliary training set (795), a validation set (327), and a test set (327).

Amount of test data:

All 327 examples in the source dataset's test set.

Data detail:

Raw image: RGB image

Annotation maps: per-pixel ground truth for semantic segmentation, depth, and surface normals.

Sample of source dataset:

Raw image:

Annotation map:

Citation information:

@inproceedings{Silberman:ECCV12,

author = {Nathan Silberman and Derek Hoiem and Pushmeet Kohli and Rob Fergus},

title = {Indoor Segmentation and Support Inference from RGBD Images},

booktitle = {ECCV},

year = {2012}

}

Licensing information:

Taskonomy

Data description:

The original Taskonomy dataset contains more than 4.6 million indoor scene images from 537 buildings. For efficient evaluation of visual foundation models, only two tasks are used: semantic segmentation and depth estimation. The training, validation, and test sets for these tasks contain 3,785, 2,840, and 2,839 samples, respectively.

Dataset structure:

Amount of source data:

The dataset is divided into an auxiliary training set (3,785), a validation set (2,840), and a test set (2,839).

Amount of test data:

All 2,839 examples in the source dataset's test set.

Data detail:

Raw image: RGB image

Annotation maps: per-pixel ground truth for semantic segmentation and depth.

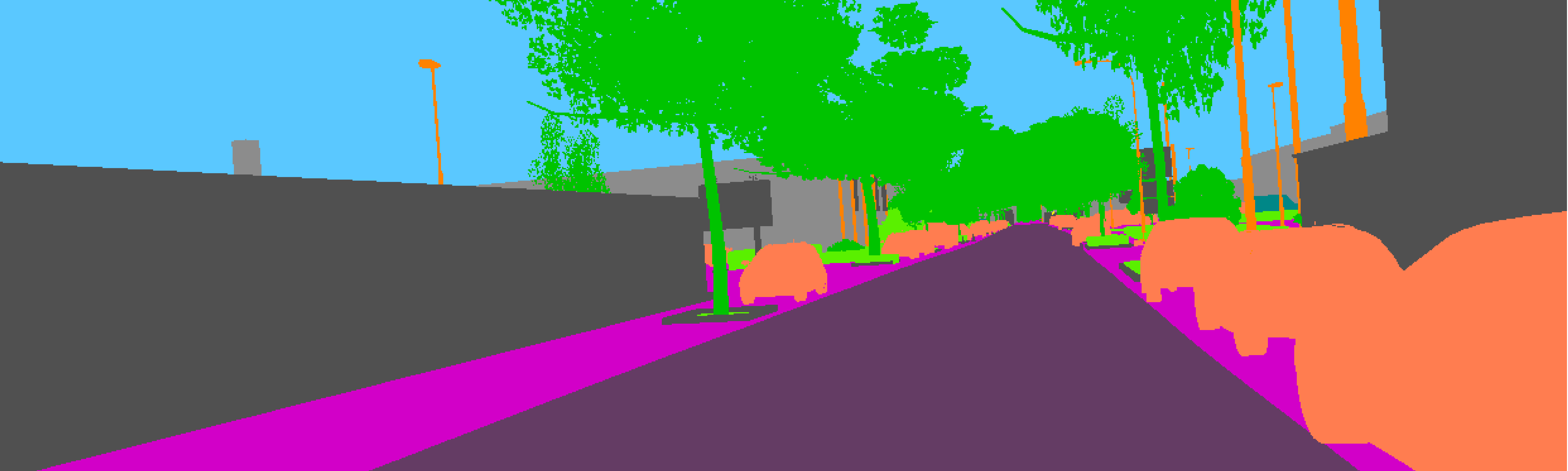

Sample of source dataset:

Raw image:

Annotation map:

Citation information:

@inproceedings{taskonomy2018,

title={Taskonomy: Disentangling Task Transfer Learning},

author={Amir R. Zamir and Alexander Sax and William B. Shen and Leonidas J. Guibas and Jitendra Malik and Silvio Savarese},

booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2018},

organization={IEEE},

}

Licensing information:

VKITTI2

Data description:

The VKITTI2 dataset is a synthetic dataset for autonomous driving. It uses computer graphics to simulate driving scenes in urban areas, rural areas, and on highways under multiple weather conditions, such as sunny, rainy, and foggy weather. Its realistic rendering, scene diversity, and detailed annotations make it useful for research in autonomous driving and computer vision. For efficient evaluation of visual foundation models, only two tasks are used: semantic segmentation and depth estimation. The training, validation, and test sets for these tasks contain 8,510, 4,230, and 8,520 samples, respectively.

Dataset structure:

Amount of source data:

The dataset is divided into an auxiliary training set (8,510), a validation set (4,230), and a test set (8,520).

Amount of test data:

All 8,520 examples in the source dataset's test set.

Data detail:

Raw image: RGB image

Annotation maps: per-pixel ground truth for semantic segmentation and depth.

Sample of source dataset:

Raw image:

Annotation map:

Citation information:

@misc{cabon2020vkitti2,

title={Virtual KITTI 2},

author={Cabon, Yohann and Murray, Naila and Humenberger, Martin},

year={2020},

eprint={2001.10773},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Licensing information:

SUN RGB-D

Metrics: mIoU, RMSE, mErr, MTL gain
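RMSE and MTL gain are likewise not defined in this document. The sketch below assumes RMSE is computed over pixels with positive ground-truth depth, and that MTL gain follows the definition common in multi-task dense prediction work: Δm = (1/T) Σ_i (-1)^{l_i} (M_{m,i} - M_{b,i}) / M_{b,i} × 100%, where M_{m,i} and M_{b,i} are the multi-task and single-task baseline scores on task i, T is the number of tasks, and l_i = 1 when lower is better for that metric. Function and argument names are hypothetical.

```python
from typing import Mapping, Optional

import numpy as np


def rmse(pred: np.ndarray, gt: np.ndarray, valid: Optional[np.ndarray] = None) -> float:
    """Root-mean-square depth error over valid pixels."""
    if valid is None:
        # Assumption: zero or negative GT depth marks missing measurements.
        valid = gt > 0
    diff = pred[valid] - gt[valid]
    return float(np.sqrt(np.mean(diff ** 2)))


def mtl_gain(mtl: Mapping[str, float], single: Mapping[str, float],
             lower_is_better: Mapping[str, bool]) -> float:
    """Average relative change (%) of multi-task scores vs. single-task baselines."""
    terms = []
    for task, base in single.items():
        # Flip the sign for metrics where lower is better (e.g. RMSE, mErr).
        sign = -1.0 if lower_is_better[task] else 1.0
        terms.append(sign * (mtl[task] - base) / base)
    return 100.0 * sum(terms) / len(terms)
```

With purely illustrative numbers, a multi-task model that raises mIoU from 40.0 to 44.0 and lowers RMSE from 0.50 to 0.45 has a gain of +10% on each task, so Δm = +10% under this definition.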

Data description:

The SUN RGB-D dataset is a large-scale indoor scene-understanding dataset released by Princeton University. It contains 10,335 RGB-D samples, each consisting of a color image and a depth map. For efficient evaluation of visual foundation models, only two tasks are used: semantic segmentation and depth estimation. The training, validation, and test sets for these tasks contain 2,665, 2,620, and 5,050 samples, respectively.

Dataset structure:

Amount of source data:

The dataset is divided into an auxiliary training set (2,665), a validation set (2,620), and a test set (5,050).

Amount of test data:

All 5,050 examples in the source dataset's test set.
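The split sizes stated in the data descriptions of this document can be kept in a small manifest and checked against the totals where a total is given. The `Split` type, the `SPLITS` table, and the `check_splits` helper are hypothetical; Taskonomy and VKITTI2 have no stated subset total, so they are not checked.

```python
from typing import Dict, List, NamedTuple, Optional


class Split(NamedTuple):
    train: int
    val: int
    test: int
    total: Optional[int] = None  # total stated in the description, if any


# Split sizes as stated in the data descriptions above.
SPLITS: Dict[str, Split] = {
    "PASCAL-Context": Split(4998, 2607, 2498, total=10103),
    "NYUDv2": Split(795, 327, 327, total=1449),
    "Taskonomy": Split(3785, 2840, 2839),
    "VKITTI2": Split(8510, 4230, 8520),
    "SUN RGB-D": Split(2665, 2620, 5050, total=10335),
}


def check_splits(splits: Dict[str, Split]) -> List[str]:
    """Return the datasets whose train + val + test does not match the stated total."""
    return [
        name for name, s in splits.items()
        if s.total is not None and s.train + s.val + s.test != s.total
    ]
```

An empty result from `check_splits(SPLITS)` means every stated total matches the sum of its splits.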

Data detail:

Raw image: RGB image

Annotation maps: per-pixel ground truth for semantic segmentation and depth.

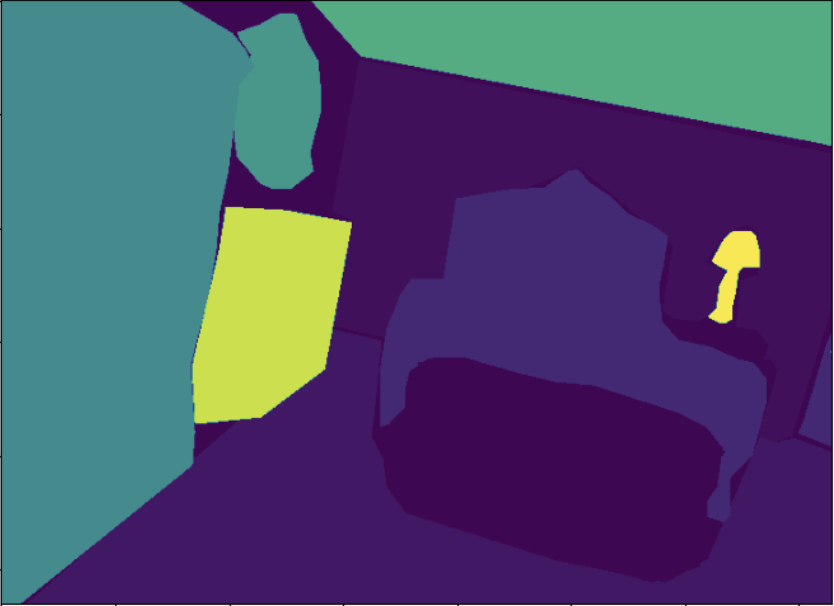

Sample of source dataset:

Raw image:

Annotation map:

Citation information:

@inproceedings{song2015sun,

title={{SUN RGB-D}: A {RGB-D} Scene Understanding Benchmark Suite},

author={Song, Shuran and Lichtenberg, Samuel P and Xiao, Jianxiong},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

pages={567--576},

year={2015}

}